

TECHNOLOGY: Core Technologies

Image processing is the core of our technology development, and optoelectronic products serve as our development platform.

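Guided by this approach, we develop products across image capture, image storage, image display, and image communication, bringing a range of products and applications to market.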

We offer customers a Total Solution covering industrial design, mechanical design, software, hardware, and firmware, together with One Stop Shopping service.

Image Display

High-definition (HD) smart displays and Internet TVs.

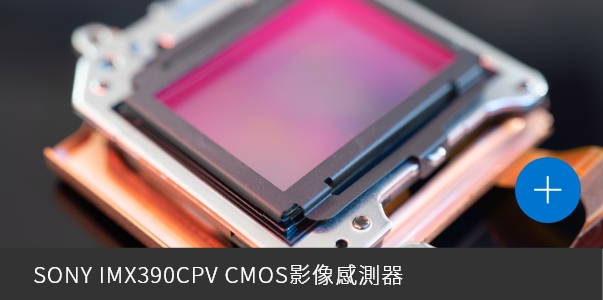

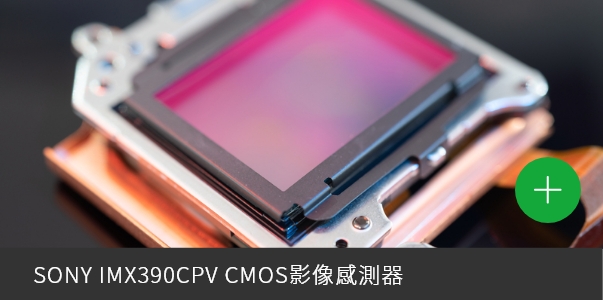

Image Capture

CCD and CMOS analog and digital high-definition (HD) smart cameras.

Image Storage

Blu-ray players, H.264 digital set-top boxes (IP Set Top Box), and smart digital TV set-top boxes (IP Smart TV Box).

Image Communication

Wi-Fi, RF, and 3GPP.
